We’re living through exciting and uncertain times.

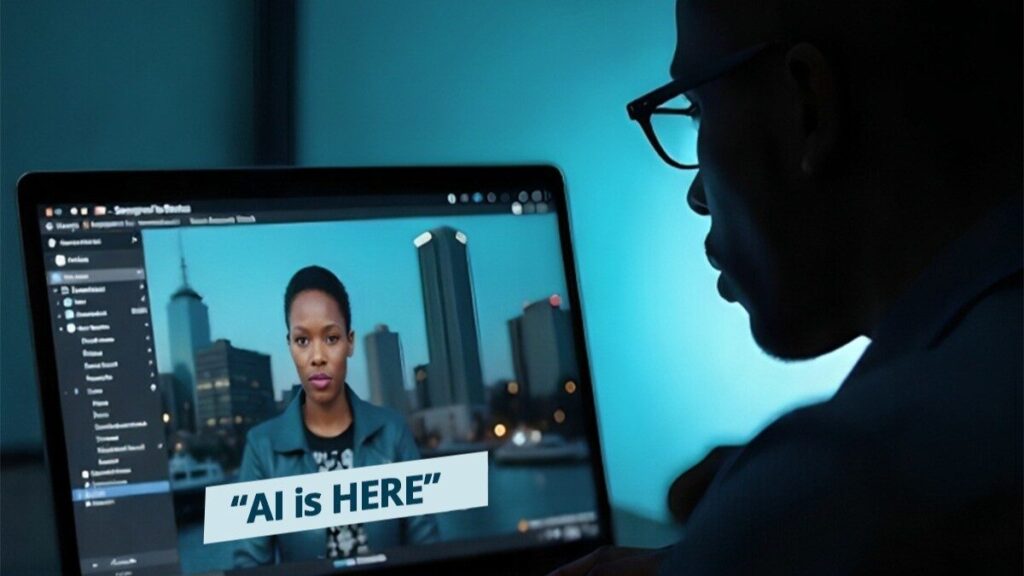

Recently, Google’s CEO unveiled updates to the company’s AI offerings, including Veo 3, which can generate scenes so realistic they appear to have been shot with actual cameras. What’s real and what’s AI-generated are now almost indistinguishable. Early users have already begun showcasing remarkable creations. One example? A fully fabricated conference, complete with interviews, participants from every demographic, and content so rich you’d think it actually happened. Just two years ago, we were laughing at AI-generated hands with six fingers. Now? We’re watching fully believable human motion, speech, and body language, across races, ages, and expressions, emerge from pure code.

From filmmaking to marketing, the implications are enormous. The cost-savings potential is huge: organizations now need smaller production budgets and more creative minds who know how to prompt AI tools, alongside powerful graphics machines and premium GenAI subscriptions. This excites me a whole lot. However, I can’t help but ask: what does this mean for truth? For verification? For trust?

This development takes Thussu’s reflections on the mediated nature of reality[1] to a whole new level. Even before this, what actually happened and what the media said happened were often not the same, because coverage was shaped by editorial bias, media agendas, and power structures. Now, the situation is even more complex. This article isn’t about media theory per se. But it’s important to highlight that media institutions now have an even greater role to play in combating misinformation and disinformation.

In Nigeria, we’ve only just begun gaining momentum with media and information literacy efforts, encouraging both youth and adults to think critically and verify information before sharing it. Now AI has arrived, advancing at breakneck speed. Seeing was once believing; that may no longer be enough. The traditional media’s value has always been in its credibility, even if it is not always first to break a story. But if AI can replicate a news anchor’s face, voice, tone, and even the studio set, and distribute fabricated news via digital platforms... how will the average person know what’s real? What will be the new tell-tale signs?

Thinking in Solutions

We are increasingly at the mercy of ethics.

- Should news organizations allow their likenesses to be fed into AI models?

- Should their broadcast content be used to train generative systems?

- To what extent should media houses themselves adopt GenAI tools in their workflows?

Really, what stops a malicious actor from capturing those very elements, tweaking them with AI, and pushing false narratives?

Consider the recent case with OpenAI’s Sora and Studio Ghibli. Ghibli's creative works were featured in AI-generated content without the creator’s consent. Though OpenAI removed the material, the internet still holds onto those AI-generated designs. Once released, there’s no real rollback. News agencies should consider restricting the use of their studio likenesses, anchors’ images, and content archives, especially in AI training sets. Could that be a workable ethical measure? What other safeguards are possible, and which ones should Nigeria and other African nations begin adopting now?

Final Thoughts

As we innovate fast, we must also pause to think deeply. Every new AI tool makes life easier and opens up spaces once limited to a few, but with that power comes significant risk. Lately, I’ve been thinking about how we approach these risks. It wasn’t until we engaged sistemaFutura's Stephen Wendell for a week-long learning workshop on behavioural systems thinking at PIC that I began to see how systems thinking could move from being just a buzzword to being a practical approach to designing innovation with awareness of long-term impact.

The way AI is reshaping media, truth, and trust isn’t just a tech issue; it’s a systems issue. Perhaps frameworks like systems thinking can help us hold space for complexity, unintended consequences, and ethical responsibility. Without that kind of structured reflection, the societal fallout from “unchecked” innovation may go far beyond digital confusion; it could quietly and permanently reshape how we perceive reality itself.

By Omofuoma Agharite, Abstract Comms Lead